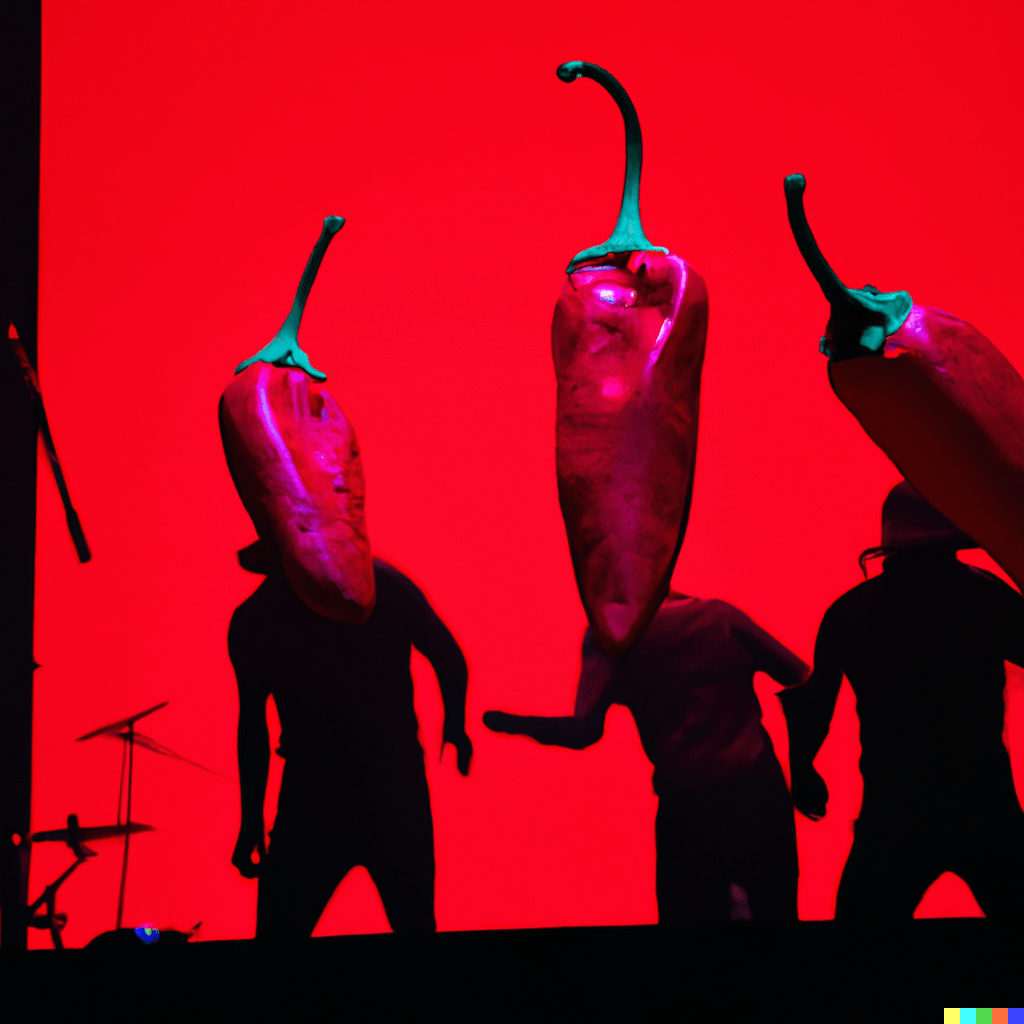

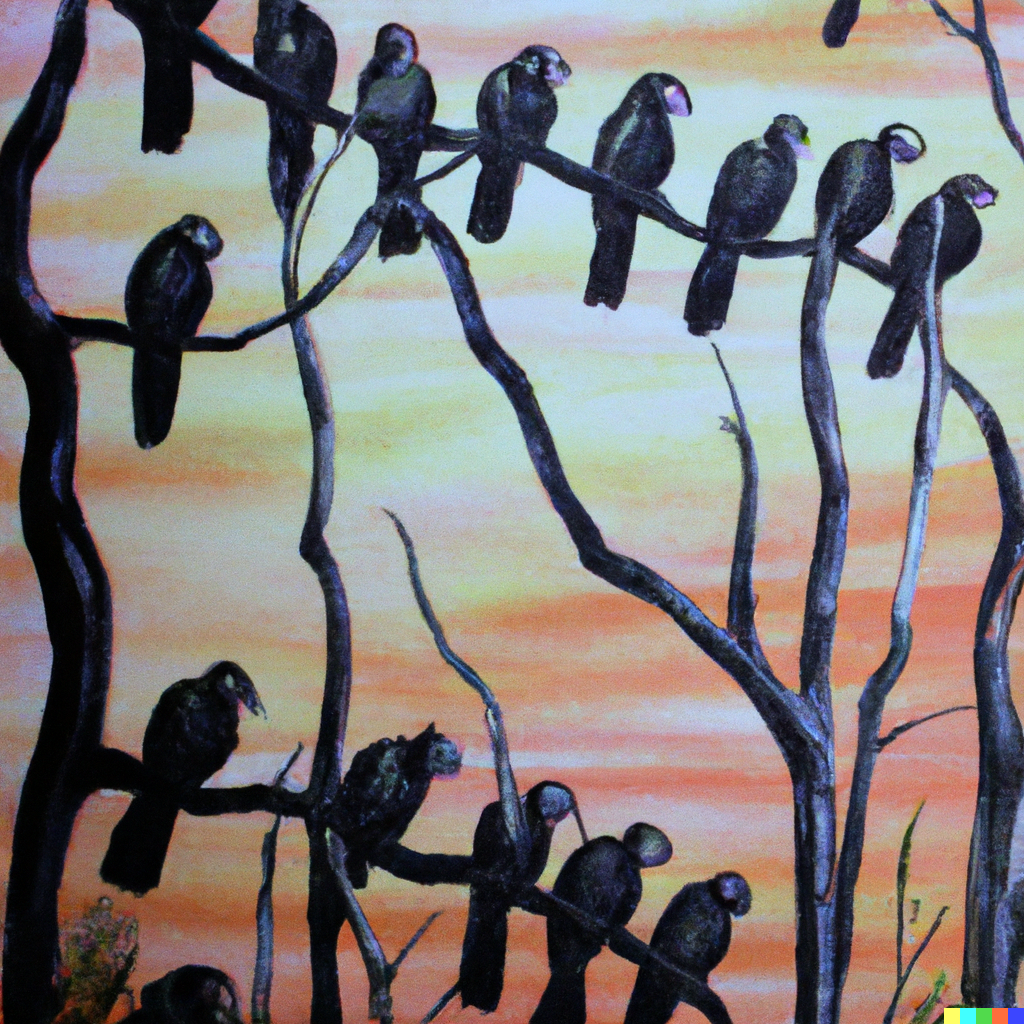

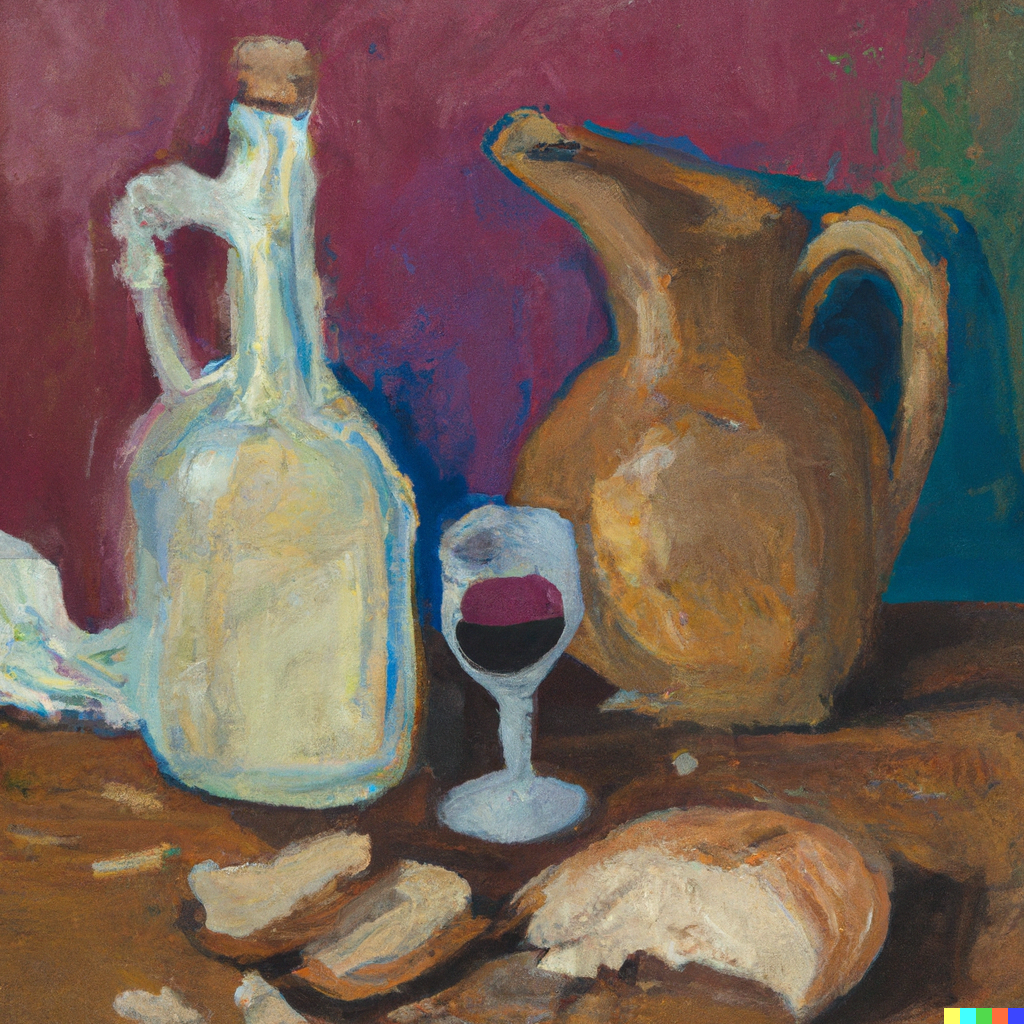

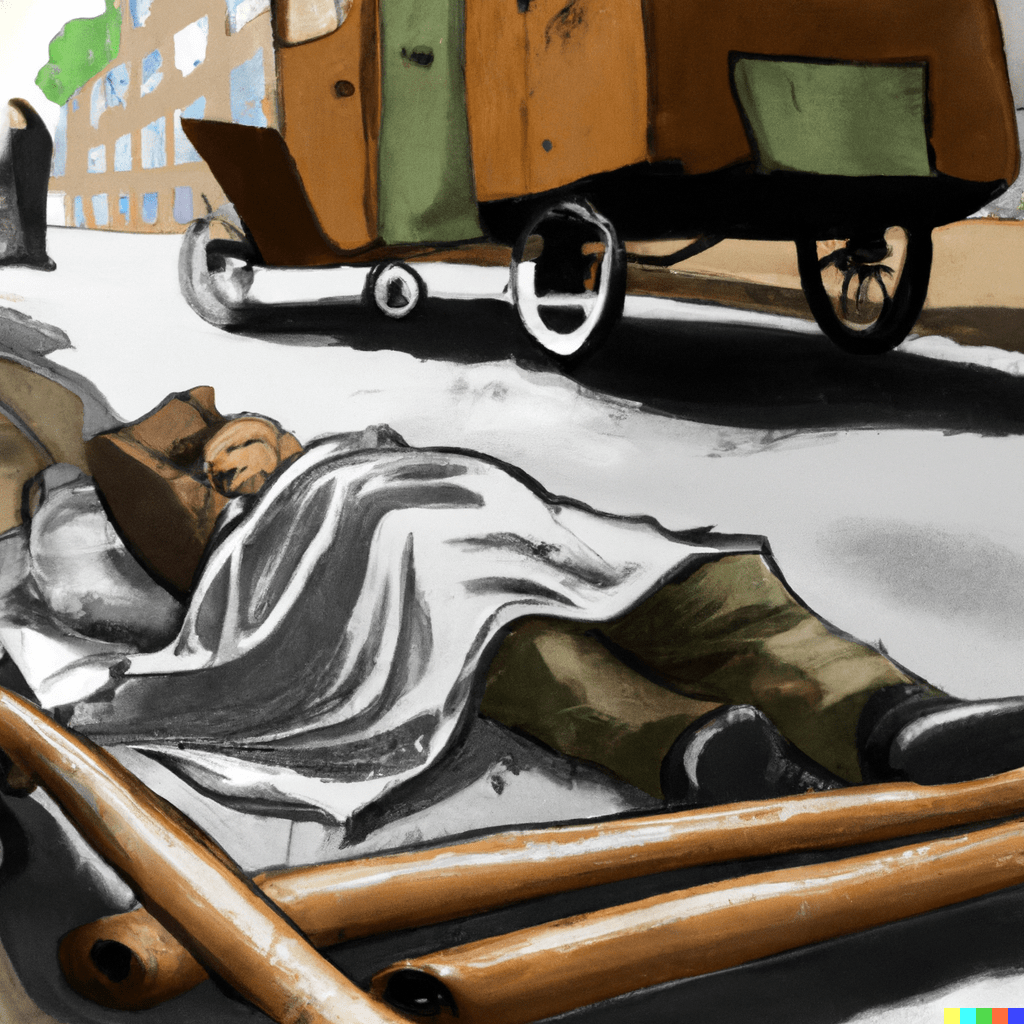

30 Days of DALL·E

Observations and output from a month of learning the DALL·E AI image generator.

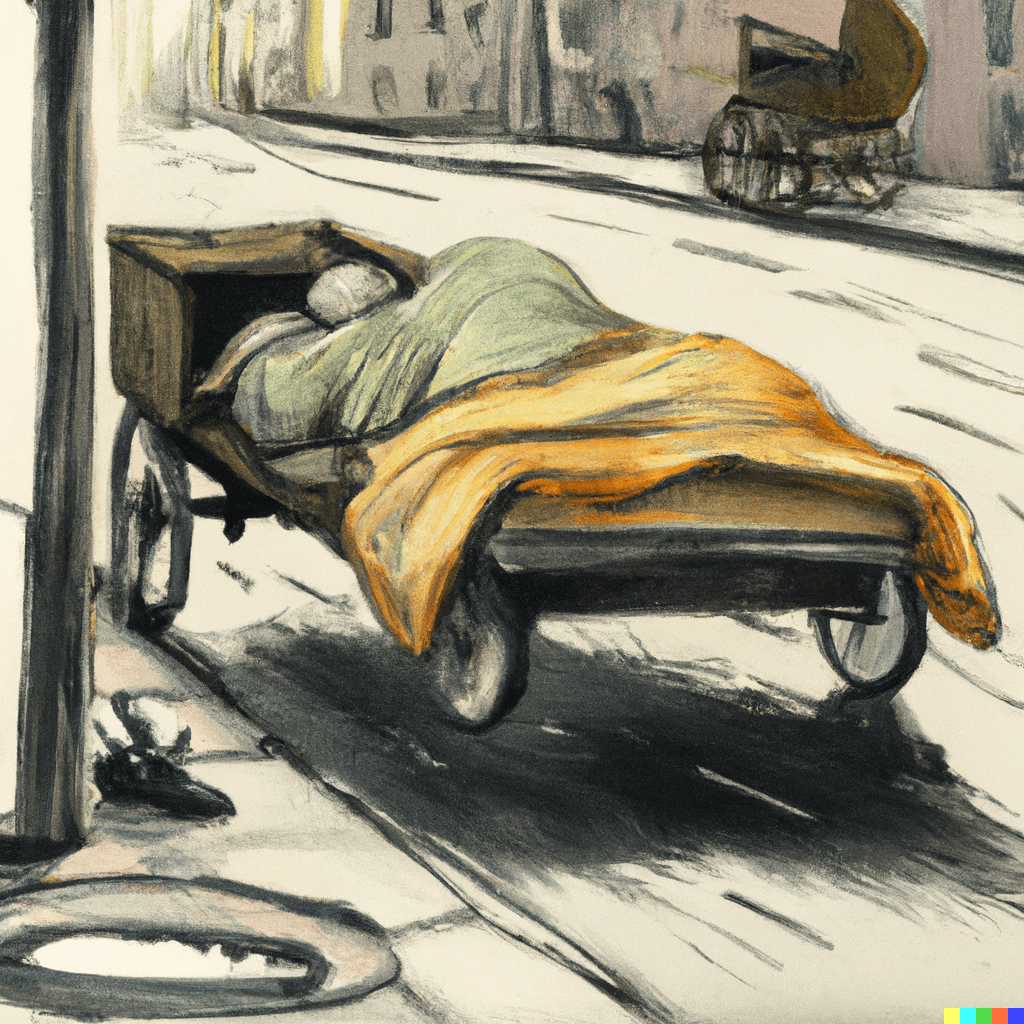

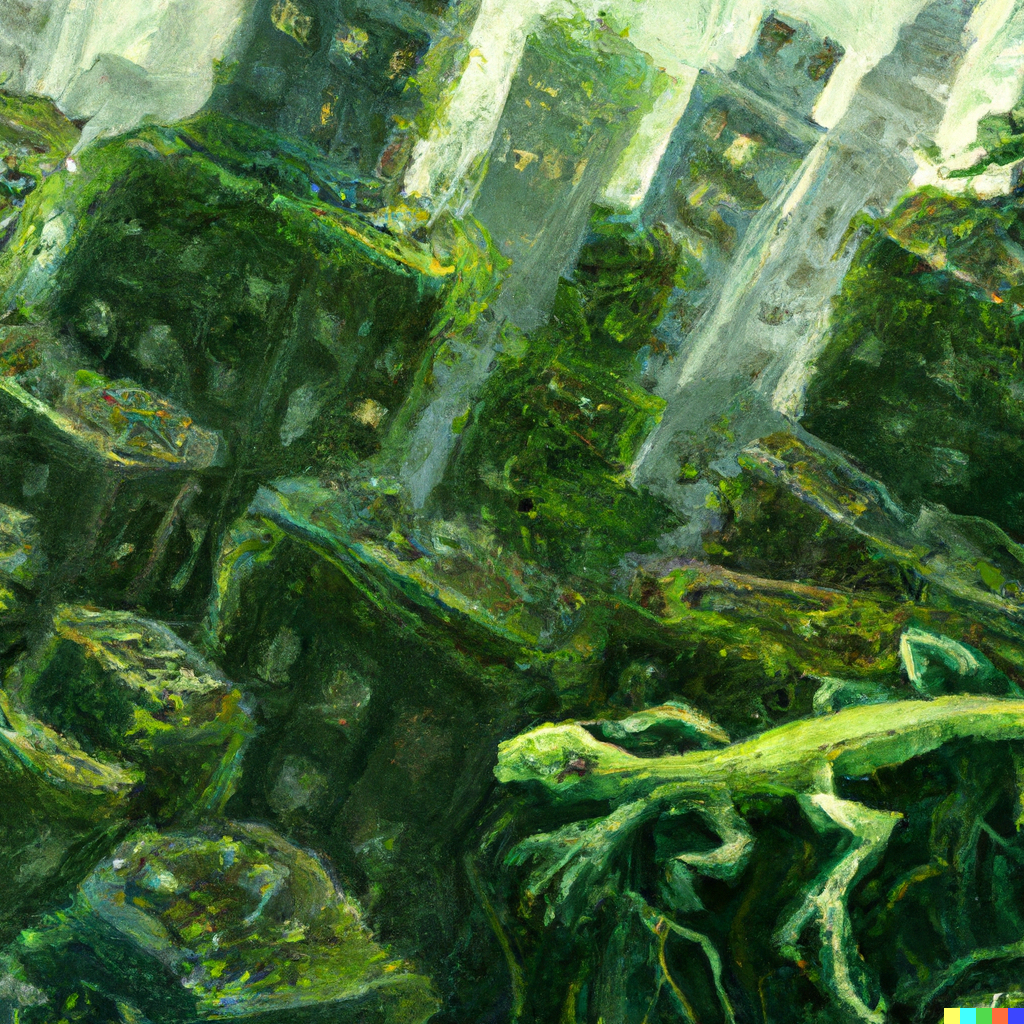

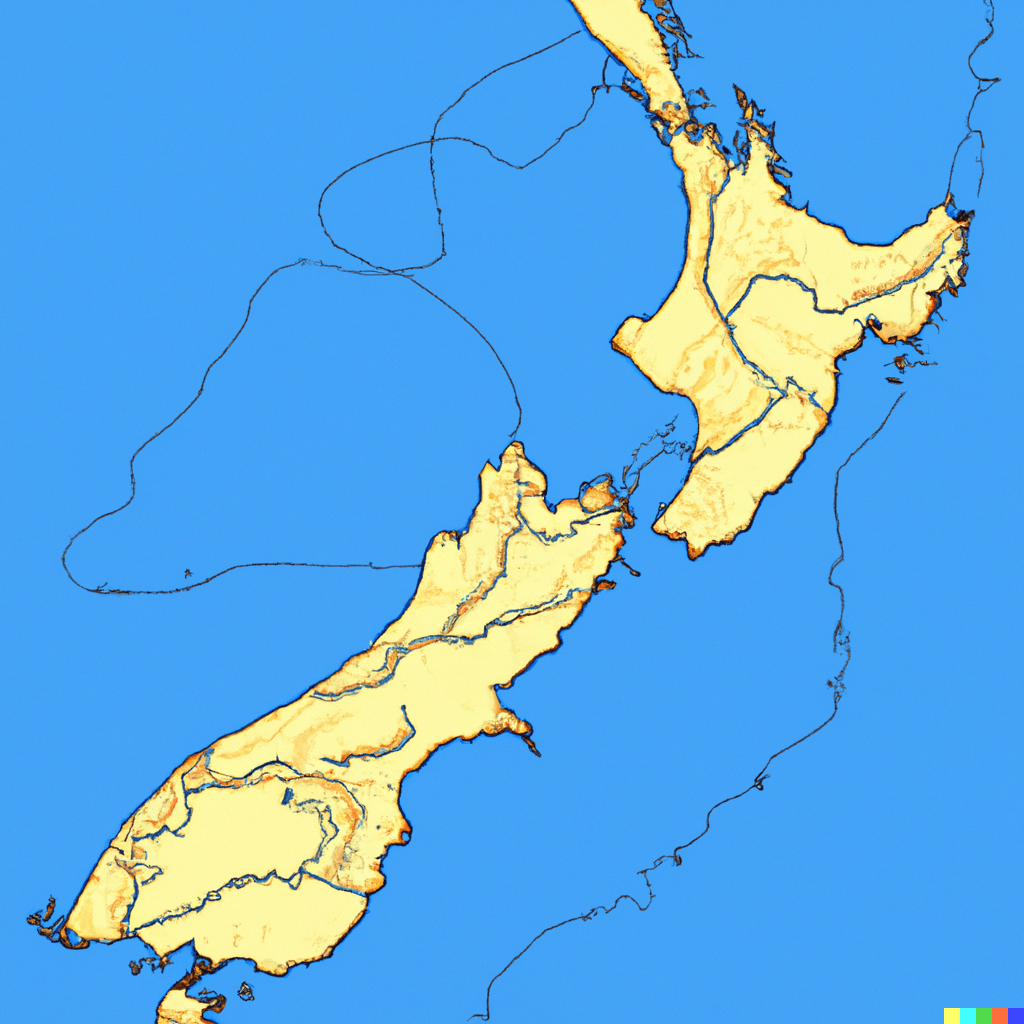

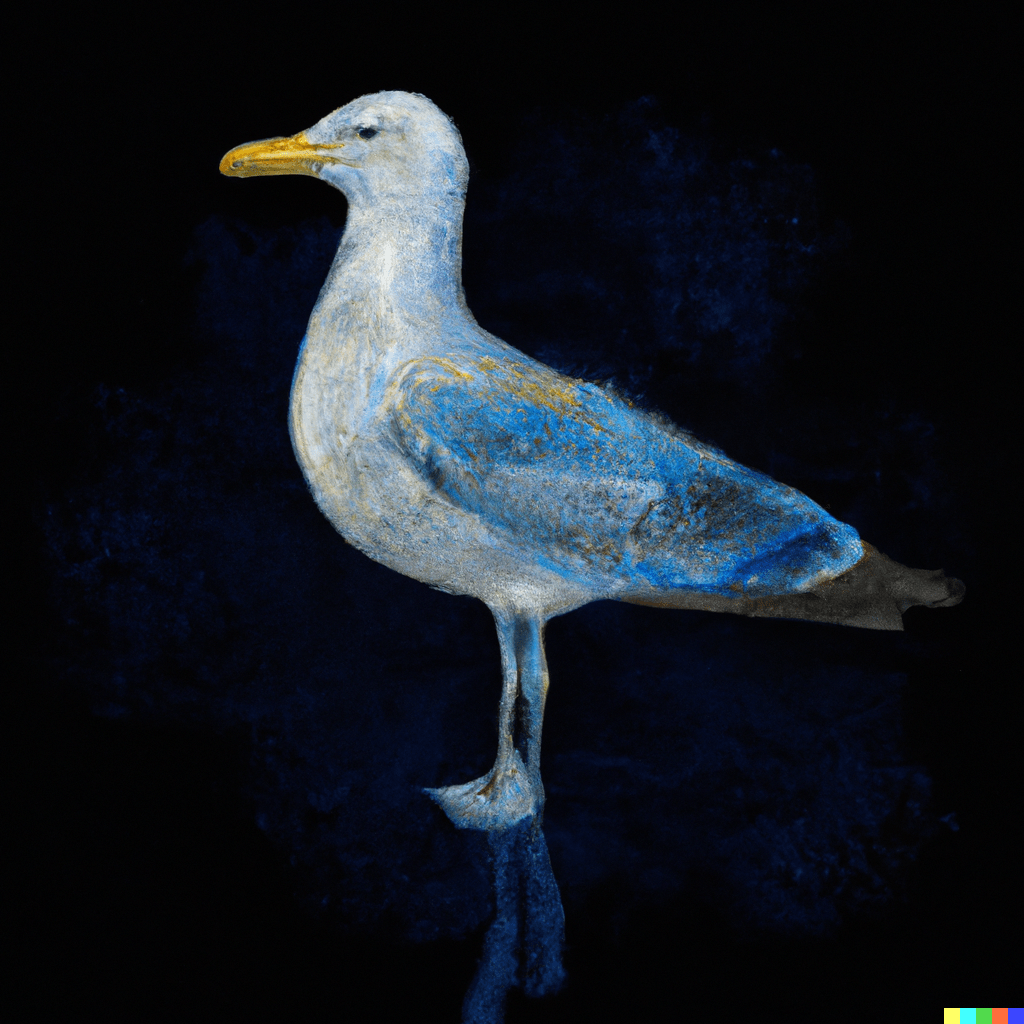

DALL·E is arguably the most advanced AI image generator available. With a short description or prompt of an intended image, it can create four completely original works within seconds. From realistic photographs to abstract paintings and everything in between.

I was blown away when I saw the quality and detail of DALL·E. The implications of AI-imagery on creative industries instantly dawned on me. Illustrators, photographers, physical and digital artists, web and product designers, film and game producers, animators, marketers, etc.

Take a case study of commissioning a digital illustration for editorial use. Currently an agency would search through artists for the right aesthetics, write a brief, negotiate a price and timeline, wait for the first draft, provide feedback, wait for revisions, then pay the bill. This whole exercise, which once cost thousands in resources, can now be done in ten minutes for a dollar or two.

I decided to learn everything I could immediately about how the tech works and how I can adapt, utilise, and integrate it in my work. If you are in one of the industries above, I recommend doing the same.

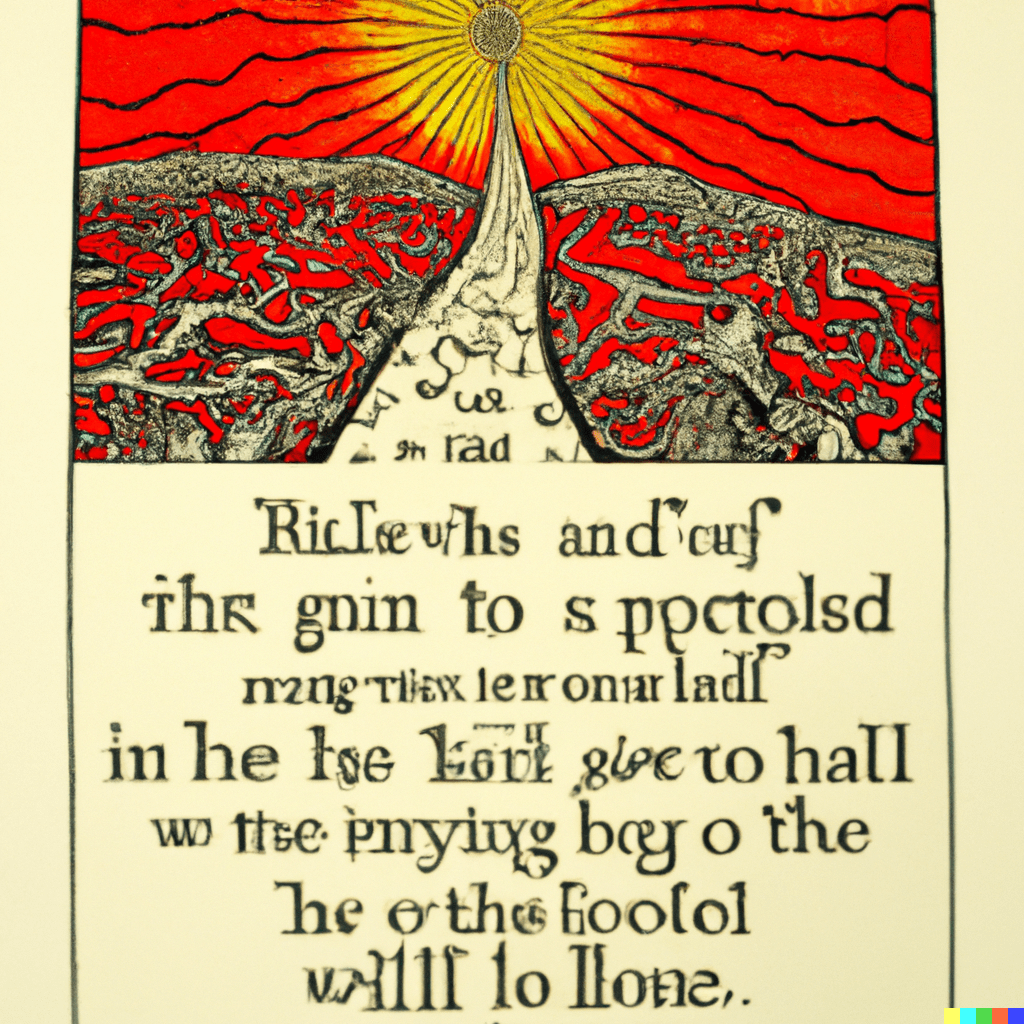

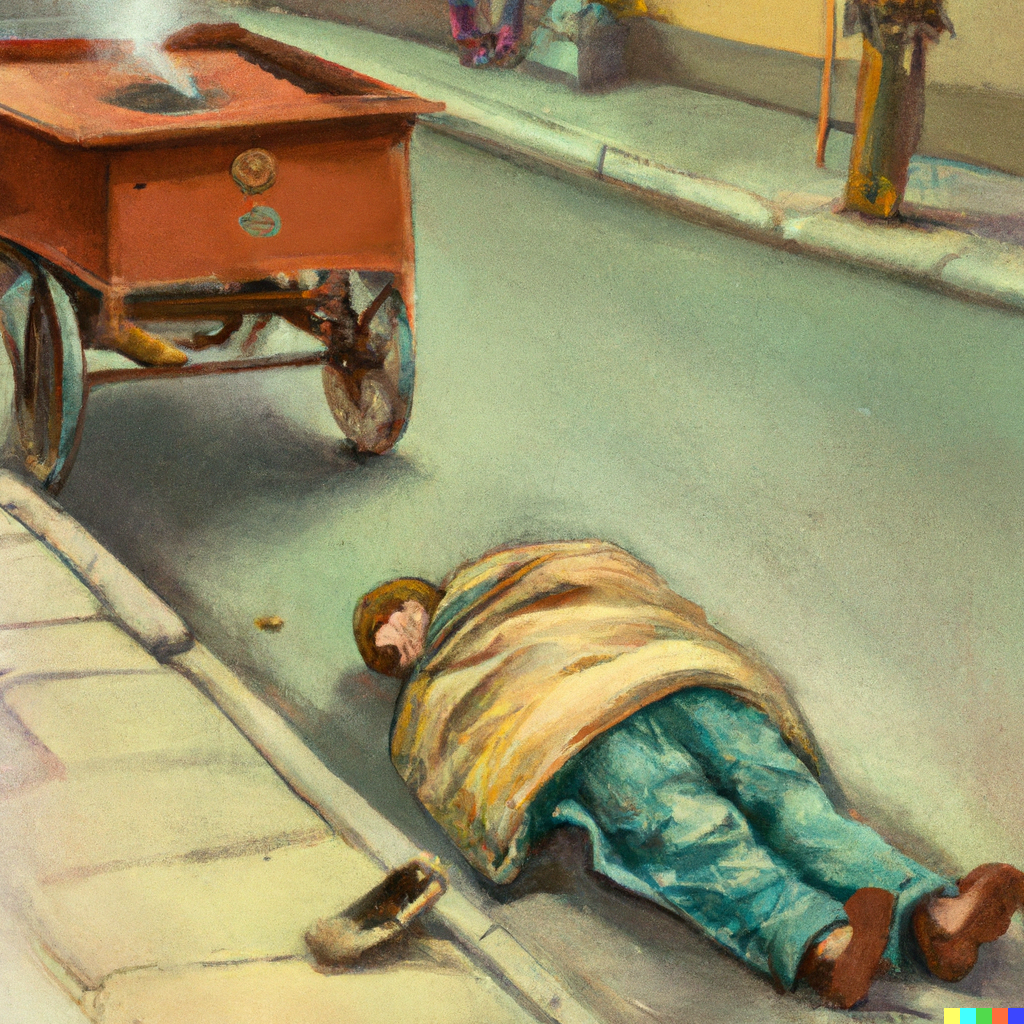

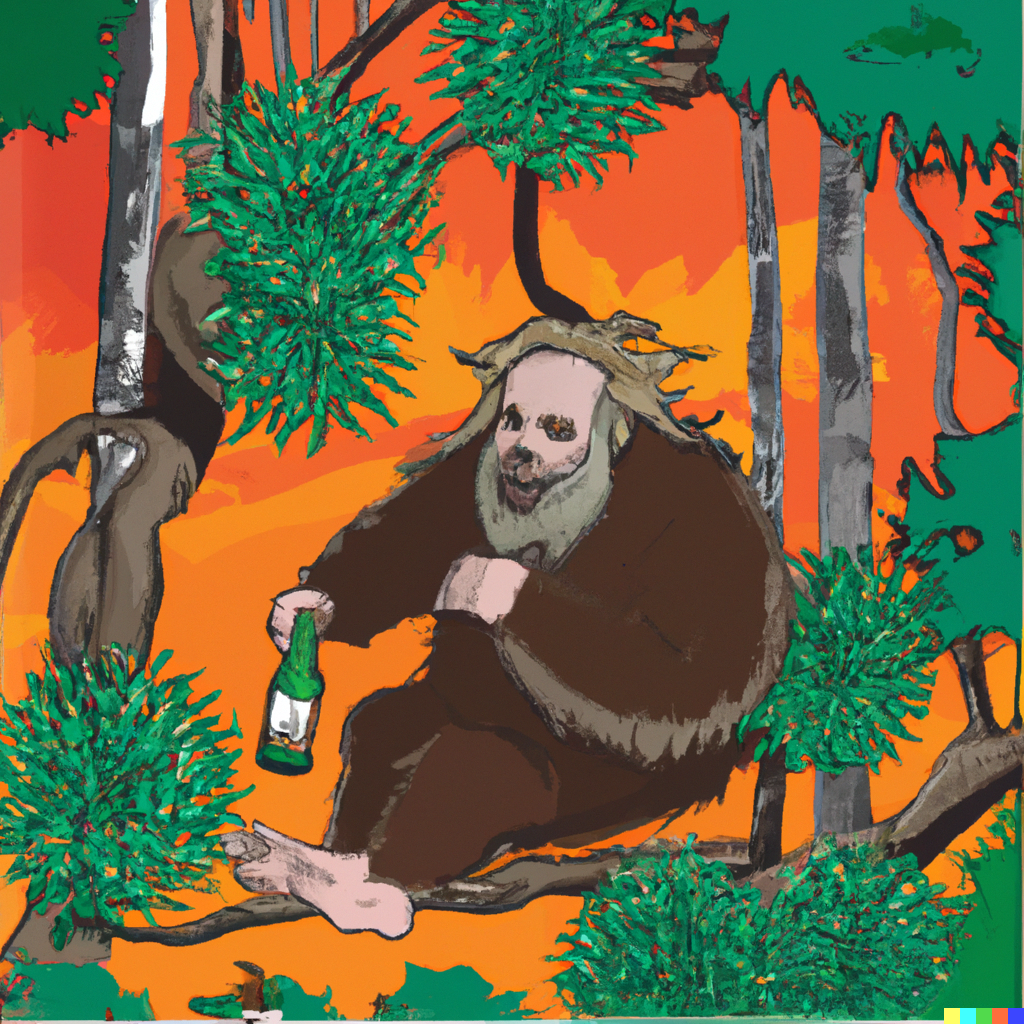

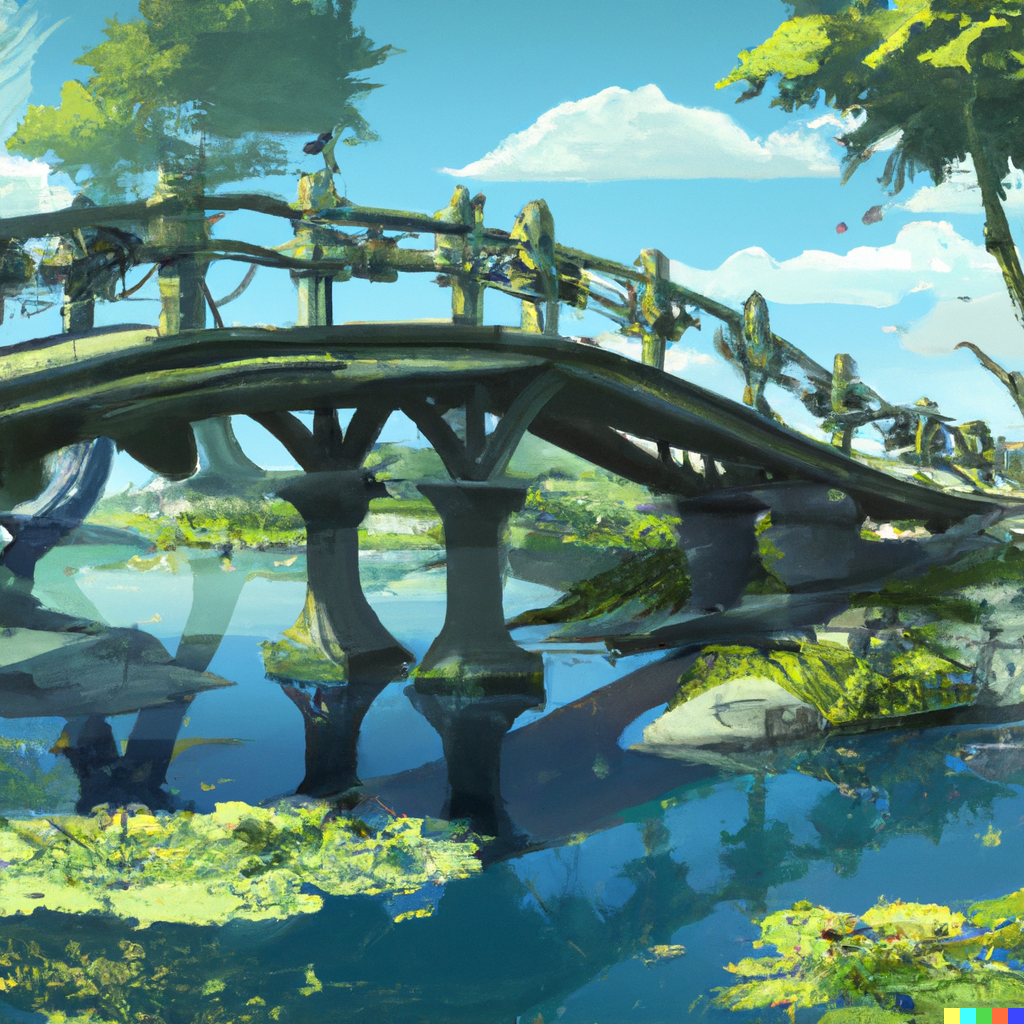

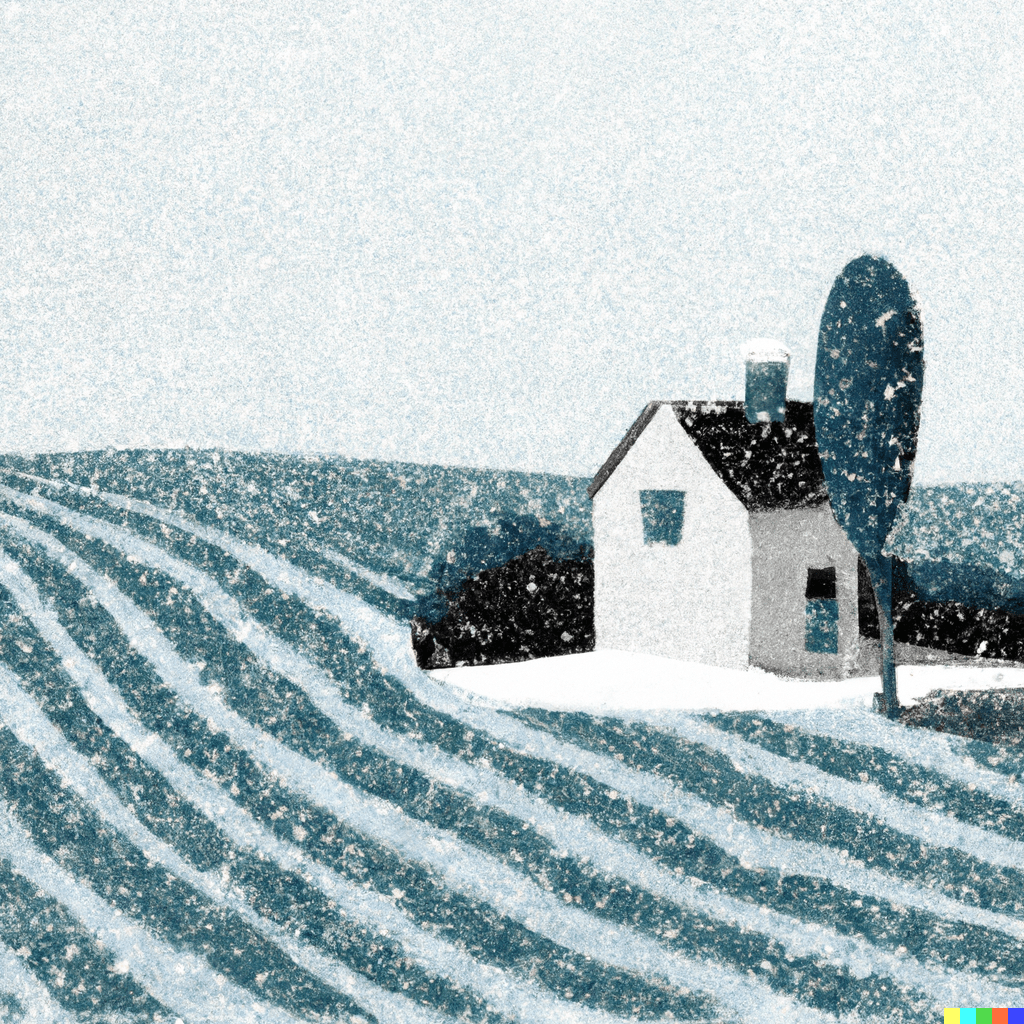

So, every day in August, I created a new DALL·E image. I tried experimenting with a variety of subjects, themes, and styles, to get a broad understanding of capabilities and shortcomings.

Before starting, I thought of DALL·E as a useful commercial tool that could not replace real art and its exploration of the human condition and beyond (the divine, sublime, infinite, eternal). Now I am not so sure. Many pieces are unexpected, thought-provoking and awe-inspiring. Considering that DALL·E knows only what we have taught it, the outputs provide a deep reflection of us and the human experience through the lens of visual language.

A few more thoughts

Observations

The notes below only apply to my experience with DALL·E. There are other AI image generators with different strengths and weaknesses, including Stable Diffusion, Midjourney, Google Parti, Jasper Art, et. al.

Ideas/Vibes > Specific Visions. When I had a precise outcome in mind, I was usually disappointed. DALL·E needs a whole dartboard to aim at, not just the bullseye. Some overly specific prompts that I could not get right were: “one small violet flower in the middle of an expansive sandy desert” and “a church with paint oozing down the walls and becoming colourless”.

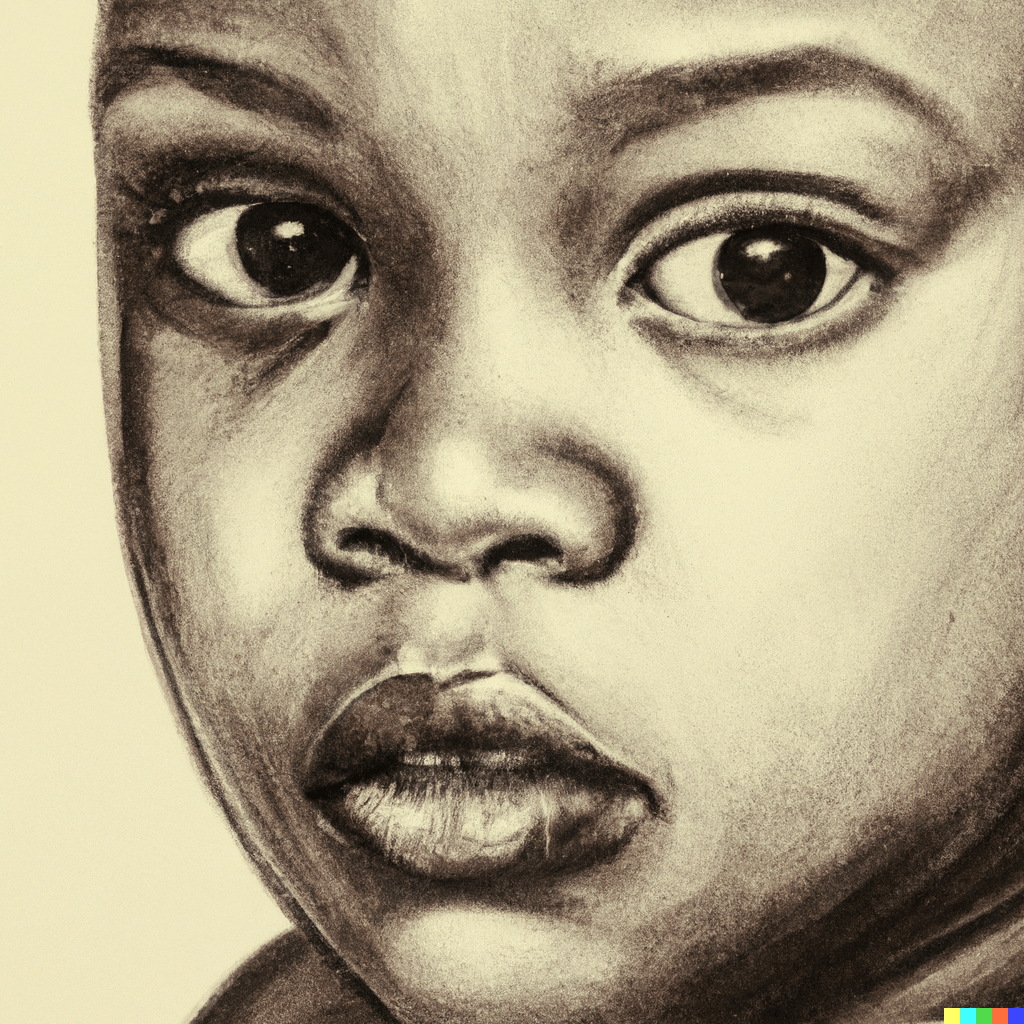

It struggles with faces. Attempted portrait photographs with human faces often have wild deformities. Horror movie shit. I have seen amazing counter-examples, though, so this could have been bad prompts from my end.

Scale is finnicky. At times I needed to be very specific about how large or small something should be. For example, when asking for "a mango tree beneath a vibrant rainbow" none of the pictures showed the complete tree – only a closeup of the branches.

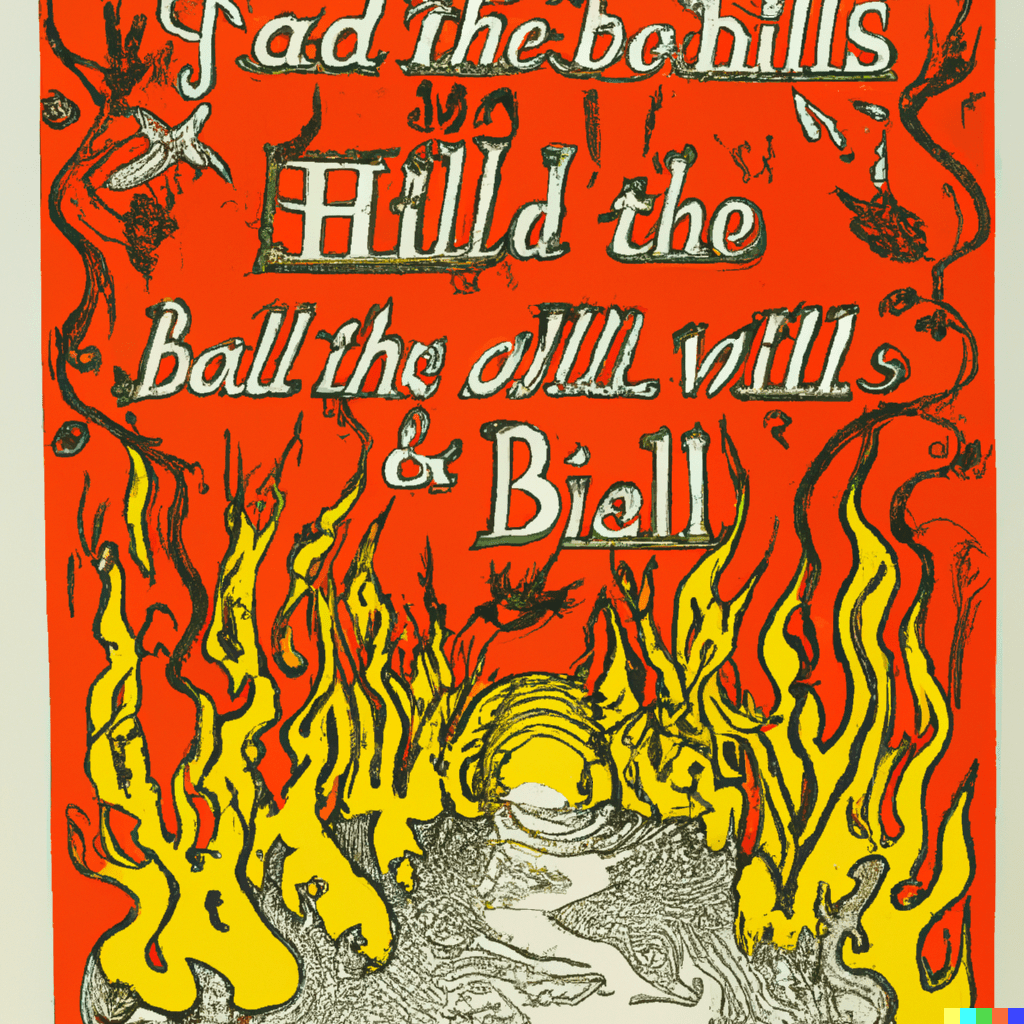

It doesn't do words. . Whenever text is in the output image (i.e. for a cartoon strip or newspaper ad), it will usually be in some weird old-English latin jibberish language. I'm unsure whether this is intentional or not. I assume it is.

It struggles with things it hasn't seen before. . I threw a few curve balls; uncommon or unlikely things like "a bridge that connects two ponds, arching over land". It produced only what it was familiar with – ordinary bridges that connected land over water.

Other capabilities

I only experimented with DALL·E’s image generation via text prompts. Other capabilities out there now or on the horizon include:

- Sketch-to-image. Along with a text prompt, provide a rough sketch (think MS Paint quality) to give the engine a clearer starting point.

- Mending and manipulation (inpainting). Photoshop editing capabilities via text prompt. Touch up, replace, tweak any part of an existing image.

- Outpainting. Draw out from a seed image to change the context, environment, or level of detail, and create massive, expansive pieces.

- Character generation. Create human-like fakes or new fantasy characters for animated films or video games.

- GenArt + AI. Combine traditional generative art with AI-image engine to get crazy results.

- Photoshop and Figma plugins. Combine all of these features right inside one app. Here’s a sneak peek.

- Video. This prompt will soon work: “15 second video of three polar bears enjoying a Lipton Iced Tea in the sun”. There will be AI-generated films in cinemas. But we’re not there yet.

Ethics

There are ethical concerns around using artists’ names directly in prompts without permission. Particularly for living artists. Also, An AI-Generated Artwork Won First Place at a State Fair Fine Arts Competition.

For me, it makes sense to clearly delineate what is AI and what is human generated. Every AI-generated image should carry some immutable metadata saying exactly what it is – for transparency, equality, and to bury the bullshit before it flies.

Beyond images

DALL·E’s AI engine, GPT-3, is all about natural language tasks. It’s already very capable and can copywrite, summarise, classify, and translate. You can use this now to make marketing material for your company, summarise lengthy articles, or as a brainstorming friend/scratchpad.

OpenAI is also building a prompt-to-code package, meaning developers might be working themselves out of a job. Or at least outsourcing mundane code to AI.

AI-generated music is also a thing. Personal choice, but I’m not paying attention yet. Nothing has wowed me in that space so far.

It's now time to level-up your understanding, practice prompt-writing, and invent new ways to apply this technology.

Resources

- Lexica – search a database of already-written prompts (good you’re out of free credits elsewhere).

- The DALLE 2 Prompt Book

- List of AI art image synthesis tools

Gallery